I'll be using the hardware configuration discussed in part 1 of this series along with Windows 8.1 (64-bit) as my guest operating system. Both my EVGA GTX 750 and my Radeon HD8570 OEM support UEFI, as determined here, and I'll cover the details unique to each. Each GPU is connected via HDMI to a small LED TV, which will also be my primary target for audio output. Perhaps in future articles we'll discuss the modifications necessary for host-based audio.

The first step is to start virt-manager, which you should be able to do from your host desktop. If your host doesn't have a desktop, virt-manager can connect remotely over ssh. We'll mostly be using virt-manager for installation and setup, and maybe for future maintenance. After installation you can set the VM to autostart, or start and stop it with the virsh command-line tools. If you've never run virt-manager before, you probably want to start by configuring your storage and networking, either via Edit->Connection Details or by right-clicking on the connection and selecting Details from the drop-down menu. I have a mounted filesystem set up to store all of my VM images, and NFS mounts for ISO storage. You can also configure full physical devices, volume groups, iSCSI, etc. Volume groups are another favorite of mine, offering full flexibility and performance. By default, libvirt creates VM images in /var/lib/libvirt/images, so it's also fine if you simply want to mount space there.

Next you'll want to click over to the Network Interfaces tab. If you want the host and guest to be able to communicate over the network, which is necessary if you want to use tools like Synergy to share a mouse and keyboard, then you'll want to create a bridge device and add your primary host network interface to the bridge. If host-guest communication is not important to you, you can simply use a macvtap connection when configuring the VM networking. This may be perfectly acceptable if you're creating a multi-seat system and providing mouse and keyboard via some other means (USB passthrough or USB host controller assignment).

Now we can create our new VM:

My ISOs are stored on an NFS mount that I already configured, so I use the Local install media option. Clicking Forward brings us to the next dialog where I select my ISO image location and select the OS type and version:

This allows libvirt to pre-configure some defaults for the VM, some of which we'll change as we move along. Stepping forward again, we can configure the VM RAM size and number of vCPUs:

For my example VM I'll use the defaults. These can be changed later if desired. Next we need to create a disk for the new VM:

The first radio button creates the disk with an automatic name in the default storage location; the second allows you to name the image and specify its type, which is why I generally select the second option. For my VM, I've created a disk with these parameters:

In this case I've created a 50GB sparse raw image file. Obviously you'll need to size the disk image based on your needs; 50GB certainly doesn't leave much room for games. You can also choose whether to allocate the entire image now or let it fault in on demand. There's a little extra overhead in using a sparse image, so if saving space isn't a concern, allocate the entire disk. I would also generally only recommend a qcow format if you're looking for the space-saving or snapshotting features it provides. Otherwise, raw provides better performance for a disk-image-based VM.
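To see what "sparse" versus "allocate the entire disk" means on the host, here's a minimal Python sketch. It is Linux-specific and assumes a filesystem that supports `posix_fallocate` (not all do). It creates two small raw files, one by only setting the length and one fully preallocated, and compares the apparent size with the bytes actually allocated. virt-manager handles this for you; the file names and the 64 MiB size are arbitrary stand-ins.

```python
import os
import tempfile


def allocation(path):
    """Return (apparent size, bytes actually allocated) for a file.

    On Linux, st_blocks counts 512-byte units regardless of filesystem block size.
    """
    st = os.stat(path)
    return st.st_size, st.st_blocks * 512


def make_raw_image(path, size, sparse=True):
    """Create a raw image file of `size` bytes, sparse or fully allocated."""
    with open(path, "wb") as f:
        if sparse:
            # Setting the length without writing leaves a hole; blocks are
            # allocated only when something is later written to them.
            f.truncate(size)
        else:
            # Ask the filesystem to reserve every block now.
            os.posix_fallocate(f.fileno(), 0, size)


if __name__ == "__main__":
    size = 64 * 1024 * 1024  # small stand-in for a real 50GB image
    with tempfile.TemporaryDirectory() as tmp:
        for sparse in (True, False):
            path = os.path.join(tmp, "sparse.img" if sparse else "full.img")
            make_raw_image(path, size, sparse)
            apparent, allocated = allocation(path)
            print(f"sparse={sparse}: apparent={apparent} allocated={allocated}")
```

On an existing image, comparing `ls -l` (apparent size) with `du` (allocated space) shows the same distinction.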

On the final step of the setup, we get to name our VM and select the option to customize the configuration before install, which I generally use anyway and which we must use for OVMF:

Clicking Finish here brings up a new dialog, where in the overview we need to change our Firmware option from BIOS to UEFI:

If UEFI is not available, your libvirt and virt-manager tools may be too old. Note that I'm using the default i440FX machine type, which I recommend for all Windows guests. If you absolutely must use Q35, select it here and complete the VM installation, but you'll later need to edit the XML and use a wrapper script around qemu-kvm to get a proper configuration until libvirt support for Q35 improves. Select Apply and move down to the Processor selection:

Here we can change the number of vCPUs for the guest if we've had second thoughts since our previous selection. We can also change the CPU type exposed to the guest. Often for PCI device assignment and optimal performance we'll want to use host-passthrough here, which is not available in the drop-down and needs to be typed manually. This is also our opportunity to change the socket/core configuration for the VM. I'll change my configuration here to expose 4 vCPUs as a single-socket, dual-core processor with threads, so that I can later show vCPU pinning with a threaded example. There is pinning configuration available here, but I tend to configure this by editing the XML directly, which I'll show later. We can finish the install before we worry about that.
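If you'd rather set the processor options directly in the XML, they end up as a `<cpu>` element. This Python sketch builds one with `xml.etree.ElementTree` for the 4-vCPU, single-socket, dual-core, two-thread layout described above. The check that sockets * cores * threads equals the vCPU count reflects what recent libvirt versions enforce; treat it as an assumption for older releases. The function name `cpu_element` is hypothetical.

```python
import xml.etree.ElementTree as ET


def cpu_element(vcpus, sockets, cores, threads):
    """Build a host-passthrough <cpu> element with an explicit topology."""
    if sockets * cores * threads != vcpus:
        raise ValueError(
            f"topology {sockets}*{cores}*{threads} does not match {vcpus} vCPUs"
        )
    cpu = ET.Element("cpu", mode="host-passthrough")
    ET.SubElement(cpu, "topology", sockets=str(sockets), cores=str(cores),
                  threads=str(threads))
    return cpu


# 4 vCPUs as one socket with two cores, two threads each.
print(ET.tostring(cpu_element(4, sockets=1, cores=2, threads=2), encoding="unicode"))
```

The printed element can replace the existing `<cpu>` block when editing the domain with virsh edit.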

The next important option for me is the Disk configuration:

As shown here, I've changed my Disk bus from the default to VirtIO. VirtIO is a paravirtualized disk interface, which means that it's designed for high performance in virtual machines. (EDIT: for further optimization using virtio-scsi rather than virtio-blk, see the comments below.) Unfortunately Windows guests do not support VirtIO without additional drivers, so we'll need to configure the VM to provide those drivers during installation. For that, click the Add Hardware button on the bottom left of the screen. Select Storage and, just as we did with the installation media, locate the ISO image for the virtio drivers on your system. The latest virtio drivers can be found here. The dialog should look something like this:

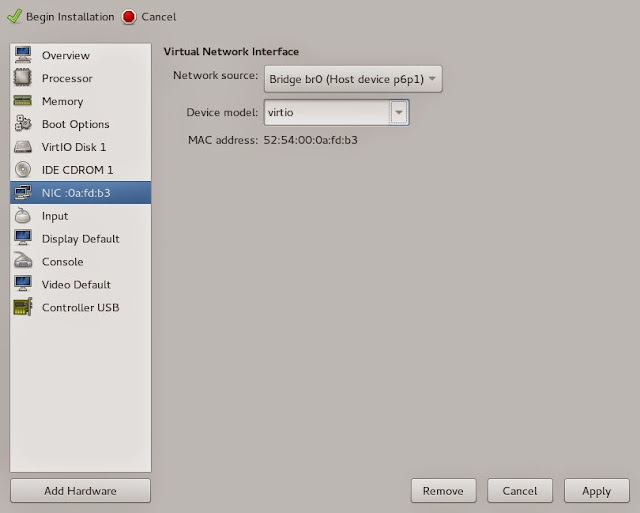

Click Finish to add the CDROM. Since we're adding virtio drivers anyway, let's also optimize our VM NIC by changing it to virtio as well:

The remaining configuration is for your personal preference. If you're using a remote system and do not have ssh authorized keys configured, I'd advise changing the Display to use VNC rather than Spice, otherwise you'll need to enter your password half a dozen times. Select Begin Installation to... begin installation.

In this configuration OVMF will drop to an EFI Shell from which you can navigate to the boot executable:

Type bootx64 and press enter to continue installation and press a key when prompted to boot from the CDROM. I expect this would normally happen automatically if we hadn't added a second CDROM for virtio drivers.

At this point the guest OS installation should proceed normally. When prompted for where to install Windows, select Load Driver, navigate to your CDROM, select Win8, AMD64 (for 64 bit drivers). You'll be given a list of several drivers to choose from. Select all of the drivers using shift-click and click Next. You should now see your disk and can proceed with installation. Generally after installation, the first thing I do is apply at least the recommended updates and reboot the VM.

Before we shut down to reconfigure, I like to install TightVNC server so I can have remote access even if something goes wrong with the display. I generally do a custom install of TightVNC, disabling client support and setting security appropriate to the environment. Make a note of the IP address of the guest and verify that the VNC connection works. Shut down the VM and we'll trim it down, tune it further, and add a GPU.

Start with the machine details view in virt-manager and remove everything we no longer need. That includes the CDROM devices, the tablet, the display, sound, serial, spice channel, video, virtio serial, and USB redirectors. We can also remove the IDE controller altogether now. That leaves us with a minimal config that looks something like this:

We can also take this opportunity to do a little further tuning by directly editing the XML. On the host, run virsh edit <domain> as root or via sudo. Before the <os> tag, you can optionally add something like the following:

    <memoryBacking>
      <hugepages/>
    </memoryBacking>
    <cputune>
      <vcpupin vcpu='0' cpuset='2'/>
      <vcpupin vcpu='1' cpuset='3'/>
      <vcpupin vcpu='2' cpuset='6'/>
      <vcpupin vcpu='3' cpuset='7'/>
    </cputune>

The <memoryBacking> tag allows us to specify huge pages for the guest. This helps improve VM efficiency by skipping page table levels when doing address translations. For this to work, you must reserve sufficient huge pages on the host system. Personally I like to do this via the kernel command line, by adding something like hugepages=2048 in /etc/sysconfig/grub and regenerating the grub configuration as we did in the previous installment of this series. Most processors will only support 2MB huge pages, so by reserving 2048 we're reserving 4096MB worth of memory, which is enough for the 4GB guest I've configured here. Note that transparent huge pages are not effective for VMs making use of device assignment, because all of the VM memory needs to be allocated and pinned for the IOMMU before the VM runs. This means the consolidation passes used by transparent huge pages will not be able to combine pages later.
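The arithmetic behind hugepages=2048 is just the guest memory divided by the huge page size, rounded up. Here's a small Python sketch of that calculation, plus a parser for the `HugePages_Total` and `Hugepagesize` fields of /proc/meminfo so you can confirm the reservation after rebooting. Both function names are hypothetical.

```python
def hugepages_needed(guest_mib, hugepage_kib=2048):
    """Pages to reserve so a guest of `guest_mib` MiB fits entirely in huge pages."""
    guest_kib = guest_mib * 1024
    # Round up: a partially used huge page still has to be reserved.
    return (guest_kib + hugepage_kib - 1) // hugepage_kib


def meminfo_hugepages(text):
    """Return (HugePages_Total, Hugepagesize in KiB) from /proc/meminfo-style text."""
    total = size_kib = None
    for line in text.splitlines():
        key, _, value = line.partition(":")
        if key == "HugePages_Total":
            total = int(value.split()[0])
        elif key == "Hugepagesize":
            size_kib = int(value.split()[0])  # reported as e.g. "2048 kB"
    return total, size_kib


if __name__ == "__main__":
    pages = hugepages_needed(4096)  # the 4GB guest used in this article
    print(f"kernel command line: hugepages={pages}")
    with open("/proc/meminfo") as f:
        print(meminfo_hugepages(f.read()))
```

If the host uses 1GB pages instead (hugepagesz=1G on CPUs that support them), `hugepages_needed(4096, 1048576)` gives 4 pages for the same guest.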

As promised, we're also configuring CPU pinning. Since I've advertised a single-socket, dual-core, threaded processor to my guest, I pin to the same configuration on the host. Processors 2 & 3 on the host are cores 2 and 3 (counting from 0) on my quad-core processor, and processors 6 & 7 are the threads corresponding to those cores. We can determine this by looking at the "core id" line for each processor in /proc/cpuinfo on the host. It generally does not make sense to expose threads to the guest unless they match the physical host configuration, as we've configured here. Save the XML, and if you've chosen to use hugepages, remember to reboot the host so the new command line takes effect; otherwise libvirt will fail to start the VM.
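Reading the "core id" lines by hand works, but the grouping is easy to script. This Python sketch, assuming x86 /proc/cpuinfo output (other architectures may lack the "core id" field), groups logical processors by `(physical id, core id)` so sibling threads show up together, and builds a `<cputune>` element from an explicit vCPU-to-host-CPU mapping. It does not choose the mapping for you, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict


def core_siblings(cpuinfo_text):
    """Map (physical id, core id) -> sorted logical processors sharing that core."""
    cores = defaultdict(list)
    # Each blank-line-separated stanza describes one logical processor.
    for stanza in cpuinfo_text.strip().split("\n\n"):
        fields = {}
        for line in stanza.splitlines():
            name, _, value = line.partition(":")
            fields[name.strip()] = value.strip()
        core_key = (int(fields.get("physical id", 0)), int(fields["core id"]))
        cores[core_key].append(int(fields["processor"]))
    return {k: sorted(v) for k, v in sorted(cores.items())}


def cputune_element(pins):
    """Build <cputune> with one <vcpupin> per (vcpu, host cpu) pair."""
    cputune = ET.Element("cputune")
    for vcpu, host_cpu in sorted(pins.items()):
        ET.SubElement(cputune, "vcpupin", vcpu=str(vcpu), cpuset=str(host_cpu))
    return cputune


if __name__ == "__main__":
    with open("/proc/cpuinfo") as f:
        for (socket, core), cpus in core_siblings(f.read()).items():
            print(f"socket {socket} core {core}: processors {cpus}")
    # The pinning used in this article: vCPUs 0-3 -> host CPUs 2, 3, 6, 7.
    print(ET.tostring(cputune_element({0: 2, 1: 3, 2: 6, 3: 7}), encoding="unicode"))
```

On a quad-core, hyperthreaded host enumerated like mine, the output pairs processors 2 and 6, and 3 and 7, on the same cores.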

I'll start out assigning my Radeon HD8570 to the guest, so we don't yet need to hide any hypervisor features to make things work. Return to virt-manager and select Add Hardware. Select PCI Host Device and find your graphics card GPU function for assignment in the list. Repeat this process for the graphics card audio function. My VM details now look like this:
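Each PCI Host Device added this way becomes a `<hostdev>` element in the domain XML. As a sketch of what that element contains, this hypothetical Python helper builds one from a full PCI address as printed by `lspci -D`, for example a GPU at 0000:01:00.0 and its audio function at 0000:01:00.1. With managed='yes', libvirt takes care of binding the device to vfio-pci when the VM starts. The guest-side `<address>` is omitted; libvirt assigns one when the device is added.

```python
import re
import xml.etree.ElementTree as ET

# Full PCI address as printed by `lspci -D`: domain:bus:slot.function
PCI_ADDRESS = re.compile(
    r"^([0-9a-fA-F]{4}):([0-9a-fA-F]{2}):([0-9a-fA-F]{2})\.([0-7])$"
)


def hostdev_element(address):
    """Build a managed PCI <hostdev> element for one host device function."""
    match = PCI_ADDRESS.match(address)
    if match is None:
        raise ValueError(f"not a full PCI address: {address!r}")
    domain, bus, slot, function = (part.lower() for part in match.groups())
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="yes")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(source, "address", domain=f"0x{domain}", bus=f"0x{bus}",
                  slot=f"0x{slot}", function=f"0x{function}")
    return hostdev


# GPU and its audio function, e.g. as listed by `lspci -D`.
for addr in ("0000:01:00.0", "0000:01:00.1"):
    print(ET.tostring(hostdev_element(addr), encoding="unicode"))
```

A saved element can also be added to the persistent definition with `virsh attach-device <domain> file.xml --config`, though virt-manager is the simpler route here.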

Start the VM. This time there will not be any console available via virt-manager; instead, the display should initialize and you should see the TianoCore boot splash on the physical monitor connected to the graphics card, followed by the Windows startup. Once the guest is booted, you can reconnect to it using the guest-based VNC server.

At this point we can use the browser to go to amd.com and download driver software for our device. For AMD software it's recommended to select the driver for your device using the drop-down menus on the website rather than allowing the tools to select a driver for you. This holds true for updates via the runtime Catalyst interface later as well; letting the tools auto-detect the driver often results in blue screens.

At some point during the download, Windows will probably figure out that its hardware has changed and switch to its built-in drivers for the device, and the screen resolution will increase. This is a good sign that things are working. Run the Catalyst installation program. I generally use an Express Installation, which hopefully implies that Custom Installations will also work. After installation completes, reboot the VM and you should now have a fully functional, fully graphics-accelerated VM.

The GeForce card is nearly as easy, but we first need to work around some of the roadblocks Nvidia has put in place to prevent you from using the hardware you've purchased in the way that you desire (a use that, by my reading, conforms to the EULA for their software, but IANAL). For this step we again need to run virsh edit on the VM. Within the <features> section, remove everything between the <hyperv> tags, including the tags themselves. In their place, add the following tags:

    <kvm>
      <hidden state='on'/>
    </kvm>

Additionally, within the <clock> tag, find the timer named hypervclock and remove the line containing that tag completely. Save and exit the edit session.
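The same three edits can be expressed as a transformation of the domain XML. This Python sketch assumes you've saved the definition with `virsh dumpxml <domain> > vm.xml`; it removes the `<hyperv>` block, adds `<kvm><hidden state='on'/></kvm>`, and drops the hypervclock timer, after which the result could be loaded with `virsh define`. virsh edit remains the simpler route, and the function name `hide_kvm` is hypothetical. ElementTree does not preserve the original quoting or layout, and it renames unregistered namespace prefixes, so the namespace used by `<qemu:commandline>` is registered explicitly.

```python
import xml.etree.ElementTree as ET

# Keep the qemu: prefix intact if the domain uses <qemu:commandline>.
ET.register_namespace("qemu", "http://libvirt.org/schemas/domain/qemu/1.0")


def hide_kvm(domain_xml):
    """Apply the Nvidia workaround edits to a libvirt domain XML string."""
    root = ET.fromstring(domain_xml)

    features = root.find("features")
    if features is None:
        features = ET.SubElement(root, "features")
    # 1. Remove the <hyperv> block entirely.
    for hyperv in features.findall("hyperv"):
        features.remove(hyperv)
    # 2. Add <kvm><hidden state='on'/></kvm>, reusing an existing <kvm> if present.
    kvm = features.find("kvm")
    if kvm is None:
        kvm = ET.SubElement(features, "kvm")
    hidden = kvm.find("hidden")
    if hidden is None:
        hidden = ET.SubElement(kvm, "hidden")
    hidden.set("state", "on")

    # 3. Drop the hypervclock timer from <clock>.
    clock = root.find("clock")
    if clock is not None:
        for timer in clock.findall("timer"):
            if timer.get("name") == "hypervclock":
                clock.remove(timer)

    return ET.tostring(root, encoding="unicode")
```

Because libvirt rejects the `<kvm>` feature if it's too old, a define failure here points at the same libvirt version issue discussed in the comments.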

We can now follow the same procedure used in the Radeon example above: add the GPU and audio functions to the VM, boot the VM, and download drivers from nvidia.com. As with AMD, I typically use the express installation. Restart the VM and you should now have a fully accelerated Nvidia VM.

For either GPU type, I highly suggest following the instructions in my article on configuring the audio device to use Message Signaled Interrupts (MSI) to improve efficiency and avoid glitchy audio. MSI for the GPU function is typically enabled by default for AMD, and it's not necessarily a performance gain on Nvidia due to an extra trap that Nvidia takes through QEMU to re-enable MSI via a PCI config write.

Hopefully you now have a working VM with a GPU assigned. If you don't, please comment on what variants of the above setup you'd like to see and I'll work on future articles. I'll reiterate that the above is my preferred and recommended setup, but VGA-mode assignment with SeaBIOS can also be quite viable, provided you're not using Intel IGD graphics for the host (or you're willing to suffer through patching your host kernel for the foreseeable future). Currently on my to-do list for this series are possibly a UEFI install of Windows 7 (if that's possible) and a VGA-mode example, disabling IGD on my host, using the GTX750 for the host and assigning the HD8570. That will require a simple qemu-kvm wrapper script to insert the x-vga=on option for vfio-pci. After that I'll likely do a Q35 example with a more complicated wrapper, unless libvirt beats me to adding better native support for Q35. I will not be doing SLI/Crossfire examples, as I don't have the hardware for it, there's too much proprietary black magic in SLI, and I really don't see the point of it given the performance of single-card solutions today. Stay tuned for future articles, and please suggest or up-vote what you'd like to see next.


Hi Alex,

Great article, thanks!

It confirmed that I have everything set up correctly, thanks to your previous posts :)

Minor nitpick: Wouldn't it be better to have a scsi-controller of type "VirtIO SCSI" and make the disk use the scsi bus? That makes it use virtio-scsi instead of virtio-blk, which should be better. Assuming I'm reading the 3 dozen wiki pages with scattered information on all this correctly.

At least virtio-scsi lets the guest issue TRIM commands to cut the image down to a sparse file when you delete things in the guest; I haven't been able to do that with virtio-blk.

Source: http://wiki.qemu.org/Features/VirtioSCSI

(I'm not even sure why I spent so much time looking at all the disk performance optimizations; my games are on a Samba share anyway...)

Yes, that's a good suggestion. In fact I use virtio-scsi for my Steam VM, but I hadn't yet figured out how to create it via virt-manager and overlooked it while writing this. It looks like the additional steps would be rather than simply setting the disk to virtio, to do:

Add Hardware -> Controller -> SCSI -> VirtIO SCSI

Then rather than changing the Disk Bus to Virtio, it should be changed to SCSI. This modification can be made during installation or after. Another performance tuning option available with virtio-scsi, and the reason I use it, is multi-queue support. To enable this, use virsh edit and find the virtio-scsi controller definition block. Within that, add:

I've read that the number of queues can be up to the number of vCPUs configured for the VM.

Blogger ate my XML, that blank spot should read:

<driver queues='4'/>
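For reference, the resulting virtio-scsi controller definition with a queues setting can be sketched in Python as below. The upper bound on queues follows the rule of thumb mentioned above (no more queues than vCPUs) rather than anything libvirt is known to enforce, and the helper name is hypothetical. The disk itself then uses bus='scsi' in its `<target>` element.

```python
import xml.etree.ElementTree as ET


def virtio_scsi_controller(vcpus, queues=None, index=0):
    """Build a virtio-scsi <controller>, optionally with multi-queue enabled."""
    controller = ET.Element("controller", type="scsi", index=str(index),
                            model="virtio-scsi")
    if queues is not None:
        # Rule of thumb from the discussion: at most one queue per vCPU.
        if not 1 <= queues <= vcpus:
            raise ValueError(f"queues must be between 1 and {vcpus}")
        ET.SubElement(controller, "driver", queues=str(queues))
    return controller


print(ET.tostring(virtio_scsi_controller(vcpus=4, queues=4), encoding="unicode"))
```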

Hmm, I spoke too soon, Windows goes into repair mode if the disk is changed to virtio-scsi, so it's best to either set it during install or add a second dummy disk using virtio-scsi, install the drivers, then switch the main disk to virtio-scsi.

Fun times to be had ;-)

Not as fun as Windows 10 though, that gives me a bluescreen right after "Press any key to install..". Not vfio related though, so slightly off-topic.

Unless you also tried it and had more luck?

Hi Alex, thanks for the series. What's going on with this: "I'd advise changing the Display to use VNC rather than Spice, otherwise you'll need to enter your password half a dozen times"? You can use Spice without password.

You skipped the first part of that sentence, "If you're using a remote system and do not have ssh authorized keys configured,..." If you know how to set up remote access w/ Spice without passwords, please share. I have it working with ssh authorized_keys, but not everybody wants to set that up when they're going to remove the Spice connection after install anyway.

> UEFI install of Windows 7 (if that's possible)

It's very simple: you can either use QXL or inject a proper autounattend.xml into the Windows ISO for a completely headless install.

Hi,

In the past, people have said that for AMD Radeon cards you need to have a Windows shutdown script that unmounts the card. Then on Windows start, the script mounts the card. This was because Radeon cards didn't reset correctly on a VM restart, only on a host restart. Is this no longer needed, as it's not mentioned in this part?

Thanks

As far as I'm aware, the reset problems on AMD cards are limited to Bonaire- and Hawaii-based cards. A workaround for this went into QEMU after the 2.3 release. It's not 100% effective, but it provides substantially improved behavior compared to before. See commit 5655f931abcf in qemu.git for this workaround. Also, since kernel v4.0 (d84f31744643), we ignore the PM reset capability of AMD cards, since they do not perform the advertised soft reset. This improves the behavior of cards like Oland. There are also AMD GPUs that don't require any special handling; Pitcairn is one that works well without any quirks.

Thanks for your fast response. I thought it was with all Radeon Cards. It's good to know that work is being done on this. I have been debating for a few months now as to which card to get. I love nVidia cards, but their insistence on trying to keep their cards from working in a VM is a major put off. I'm afraid if I went the nVidia route, I would be left with a broken system with a future update, but Radeon cards are just not as stable. It really is a gamble to spend big money on a card, then later it won't work.

I tried adding:

To which the system said:

error: unsupported configuration: unexpected feature 'kvm'

Failed. Try again? [y,n,f,?]:

The missing bits are the bracketed kvm and hidden state tags. Guess the editor didn't like.

Clearly you need a newer libvirt.

Yeah... looks like doing this on Linux Mint or Ubuntu will NOT work, as newer versions aren't available, not even in PPAs.

Off to Fedora land, then! Thanks.

Hi,

I have a strange problem.

When I try to install Windows 8.1 with your instructions above, it fails at the "transferring files" step right after selecting which drive to use. It goes from 0% to a blue screen.

I rebooted the virtual machine, but chose an OpenSuse install DVD, so the exact same machine, and it worked perfectly.

Do you know what's going on?

Thanks,

Jon

Use a pre-July build of OVMF, there's a bug upstream that's breaking Windows installs.

Ah. Thanks, but I got my build here:

https://www.kraxel.org/repos/jenkins/edk2/

Would anyone happen to have previous builds?

Thanks,

Jon

Try the latest 20150726 build from that repo, rumor has it that it includes the fix.

Sadly that version didn't work either. I went through the commit history and tried the July 12th commit: d5b5b8f8aa956266289ad9c523a410419fea87f8

This one works. It's also using the new OpenSSL. The first one I tried from the RPM repo was from the 16th, I think, so sometime between the 12th and 16th, something broke Windows.

I made a script to fetch everything at that commit, patch, compile, and install, so all I had to do was try different commits until one worked with Windows 8.1. :) I can post the script if anyone wants it.

Jon

Can you post the commit that worked for you, Jon? Or the script?

Alex, I think it would be helpful to mention that there is also a useful kernel parameter for the host machine to isolate the VMs' CPUs from the host: 'isolcpus'. Its syntax is like a libvirt CPU list. With it you can guarantee that the host machine will not consume CPU time of guest VMs. But isolated CPUs may still be used when a process's affinity is set to use them.
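To check that the CPUs isolated with isolcpus line up with the cpuset values in `<cputune>`, a CPU list can be expanded with a few lines of Python. This is a sketch with a hypothetical function name: it handles single CPUs, inclusive ranges, and libvirt's "^N" exclusions, but not the optional flag prefixes or stride syntax that newer kernels accept for isolcpus.

```python
def parse_cpu_list(spec):
    """Expand a CPU list like "2-3,6-7" or "1-4,^3,6" into sorted CPU numbers."""
    include, exclude = set(), set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        target = include
        if part.startswith("^"):  # libvirt-style exclusion
            target, part = exclude, part[1:]
        if "-" in part:
            first, last = (int(x) for x in part.split("-", 1))
            if first > last:
                raise ValueError(f"invalid range: {part!r}")
            target.update(range(first, last + 1))
        else:
            target.add(int(part))
    return sorted(include - exclude)


isolated = set(parse_cpu_list("2-3,6-7"))  # e.g. isolcpus=2-3,6-7
pinned = {2, 3, 6, 7}                      # cpuset values from <cputune>
print(pinned <= isolated)
```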

I'm having a bit of trouble with the passthrough part. Running Fedora 22, kernel 4.1.4 (no patches, no change to config) booted via UEFI. I installed Windows 8.1 successfully, however when I add in the PCI devices (AMD Tahiti XL gpu + sound) the video output from the card never activates, the host never gets past bios and the CPU pegs at 12% (running 8 cores, this is why I figure it never makes it past the bios).

Here are the (likely) relevant areas of my XML configuration:

I can pass in ordinary devices (I passed in an audio PCI device without issue); however, as soon as I try the video card, the above happens. I am using the vfio-pci option disable_vga=1 from part 3, as well as iommu=pt for the kernel.

    <domain type='kvm' xmlns:qemu='http://libvirt.org/schemas/domain/qemu/1.0'>
      <name>windows-8.1-x64</name>
      <uuid>xxx</uuid>
      <memory unit='KiB'>2097152</memory>
      <currentMemory unit='KiB'>2097152</currentMemory>
      <vcpu placement='static'>8</vcpu>
      <sysinfo type='smbios'>
        <bios>
          <entry name='vendor'>American Megatrends Inc.</entry>
          ...
        </bios>
        <system>
          <entry name='manufacturer'>GIGABYTE</entry>
          ...
        </system>
      </sysinfo>
      <os>
        <type arch='x86_64' machine='pc-i440fx-2.3'>hvm</type>
        <loader readonly='yes' type='pflash'>/usr/share/edk2.git/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
        <nvram>/var/lib/libvirt/qemu/nvram/windows-8.1-x64_VARS.fd</nvram>
        <smbios mode='sysinfo'/>
      </os>
      ...
      <cpu mode='host-passthrough'>
        <topology sockets='1' cores='4' threads='2'/>
      </cpu>
      ...
      <devices>
        <emulator>/usr/bin/qemu-kvm</emulator>
        ...
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <source>
            <address domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
          </source>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <source>
            <address domain='0x0000' bus='0x01' slot='0x00' function='0x1'/>
          </source>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
        </hostdev>
        <memballoon model='virtio'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
        </memballoon>
      </devices>
      <qemu:commandline>
        <qemu:arg value='-acpitable'/>
        <qemu:arg value='file=/usr/share/libvirt/firmware/GIGABYTE/acpi/tables/MSDM'/>
        <qemu:arg value='-acpitable'/>
        <qemu:arg value='file=/usr/share/libvirt/firmware/GIGABYTE/acpi/tables/SLIC'/>
      </qemu:commandline>
    </domain>

The vfio-users mailing list is a better option for support than comments here. https://www.redhat.com/mailman/listinfo/vfio-users

I've got two cards that are the same model, so when I tell pci-stub to take one of the cards, the other card gets claimed by pci-stub too. How can I get around this? Is there some way I can change the device ID of the second card?

Part 3 covered exactly this topic.

Sorry about that, I somehow managed to skip past that. Do you know where Ubuntu's "modprobe.d" file is located? For setting up driver overrides.

I do not have the option to select the Firmware, and Chipset isn't even present. I installed QEMU, KVM, libvirt and virt-manager via apt-get, so it should be recent enough (it installs libvirt 1.2.12; if this isn't recent enough, you should give specific instructions on how to install the correct version).

http://i.imgur.com/DHj67ga.png

Obviously your virt-manager is not recent enough

"You should already have your distribution packages installed for QEMU/KVM and libvirt, including virt-manager".

Where did you get these, then, if the ones provided via apt-get are not recent enough?

I removed virt-manager and looked for alternate ways to install it from the official site, and ended up installing it again the same way to check the version. After reinstalling it I got an error saying it could not find the virtinst module, so I removed all the other libvirt packages to reinstall everything. After rebooting, my eth1 no longer shows up in ifconfig, and I can no longer connect to the internet (this seems to be caused by setting up the bridge before starting the process to create the VM).

Would it be possible to contact you over IRC or skype to work out these issues?

"For my setup I'm using a Fedora 21 system with the virt-preview yum repos to get the latest QEMU and libvirt support along with Gerd Hoffmann's firmware repo for the latest EDK2 OVMF builds."

http://vfio.blogspot.com/2015/05/vfio-gpu-how-to-series-part-3-host.html

OK, I see what happened. The blog archive on the right side of the page (in May) goes from step 2 to step 4, with step 3 being under June. The question now is how do I fix my Ethernet?

Yes, I made a correction in part 3 that broke the timestamp in blogger. Sorry. As for your Ethernet, file a bug with your distro.

The Ethernet was broken by virt-manager or libvirt; filing a bug will just get me a "third party software, not our problem".

I have libvirt 1.2.19, virt-manager 1.2.1 and KVM 2.2.0 installed. However, UEFI is not available in firmware.

http://i.imgur.com/O4ypOTX.png

"Libvirt did not detect any UEFI/OVMF firmware image installed on the host." is what the yellow triangle says.

See the nvram= option in /etc/libvirt/qemu.conf for either where to put OVMF so that libvirt will find it, or how to specify where you have it installed.

Which specific RPM is needed from https://www.kraxel.org/repos/?

I don't see anything in jenkins/seabios/ with a .fd extension (which is what the nvram example shows). Is it in a different directory?

https://www.kraxel.org/repos/jenkins/edk2/edk2.git-ovmf-x64-0-20150922.b1224.g2f71ad1.noarch.rpm

This is what I have it set to, yet I am still not able to select UEFI for firmware:

    nvram = [
      "/usr/share/edk2.git/ovmf-x64/OVMF_pure-efi.fd:/usr/share/edk2.git/ovmf-x64/OVMF_VARS-pure-efi.fd"
    ]
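When UEFI doesn't show up in virt-manager, one thing worth ruling out is an `nvram` entry pointing at a file that doesn't exist; it may be worth comparing the code image name above with the OVMF_CODE-pure-efi.fd path in the configuration posted earlier in this thread. Each entry is a CODE:VARS pair, and this Python sketch (hypothetical helper) reports any missing paths. It only checks existence, not whether libvirt accepts the images, and libvirtd reads qemu.conf at startup, so it needs a restart after editing.

```python
import os


def missing_nvram_paths(entries):
    """Return paths from qemu.conf-style "CODE:VARS" entries that are not files."""
    missing = []
    for entry in entries:
        code, sep, vars_template = entry.partition(":")
        if not sep:
            raise ValueError(f"expected CODE:VARS, got {entry!r}")
        for path in (code, vars_template):
            if not os.path.isfile(path):
                missing.append(path)
    return missing


nvram = [
    "/usr/share/edk2.git/ovmf-x64/OVMF_CODE-pure-efi.fd:"
    "/usr/share/edk2.git/ovmf-x64/OVMF_VARS-pure-efi.fd",
]
print(missing_nvram_paths(nvram) or "all firmware images found")
```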

Hi, thanks to the information in your posts I am no longer dual-booting my desktop. But it seems to me that a regression regarding virtualisation sneaked into the Linux kernel somewhere around the 4.2 release. The symptoms are: VMs with PCI devices assigned via vfio run extremely slowly with very high CPU utilisation. Consequently, Windows cannot boot: the boot animation (the Windows logo with the rotating dots beneath it) runs at one frame per 10 seconds, and the OS reboots automatically after some time, before even getting past the boot animation. The problem goes away if one removes the PCI devices from the VM configuration. After many hours of experiments, it turns out that the only solution in my case was to downgrade the kernel to 4.1.6-1-ARCH. I don't know if that is a generic Linux regression or something specific to the Arch Linux kernels, but the problem occurred after an upgrade to kernel 4.2.1-1-ARCH. Since I skipped the 4.2 version, I cannot tell for sure if the problem came with 4.2.1-1 or earlier. The hardware is: i7-5820K on a GB X99-UD4; the PCI device is a GTX 970 and its audio function.

My R9 280 isn't working correctly; I get very low fps. I ran dxdiag, and under the Display tab it says there's 7GB of VRAM even though there is only 3GB. If I go into GTA 5's graphics settings it says I only have 512MB of video memory. So what could I be doing wrong?

Oh yeah, I checked for a CPU bottleneck; that's not it. Still the same problem.

Turns out the 7GB it's showing is the system memory. But I still have the problem with low fps.

The graphics cards lack UEFI support in their ROM? Maybe the ROM is inaccessible/invalid and you need to pass a ROM via file?

I'd suggest moving to the vfio-users mailing list, the comments section here is not really conducive to this sort of thing.

Any ideas as to why I'm getting low fps in my VM? I've given my VM 8 cores to check if it's a CPU problem, but it had no effect.

I would also suggest the vfio-users list is the appropriate place to try to figure that out.

Hello

I have a problem with GPU passthrough. I followed the instructions on the Arch wiki and did everything as written there, but it's not working as it should. I installed Windows 8.1 in a virtual machine that detects my GPU but displays an Error 43. I found a solution here. My GPU also has two direct outputs, HDMI and DisplayPort, and I flashed the GPU VBIOS with UEFI support. I use 3 different kernels: the standard Arch Linux kernel 4.2.2-1, the LTS kernel, and a VFIO-patched kernel (from the AUR). I also tried the i915 VGA arbiter patch, but it did not help either. My VM detects the card without any problem, but when I try to run a game, the nvidia driver just crashes and the game shuts down.

Great article thanks.

Quick question: by installing the VM on a separate drive, will I be able to plug the drive into my MacBook as an external drive and launch the VM from OS X (since OS X supports QEMU)?

Will I have to set things up differently?

You could theoretically use the VM image, but OSX probably doesn't have KVM support and definitely doesn't have VFIO support, so you may have compatibility and feature issues.

As long as it works well enough for me to boot the VM, download things to update Windows, and get some games, I'm OK with it.

Is anyone having an issue when booting the VM and trying to install Windows?

I'm currently trying to install Win10 (after many struggles lol) and I got a System Thread Exception Not Handled after the Windows logo, damn :/

If anybody runs into the same error, it has to do with the type of processor you select for the VM; core2duo works just fine for me.

Trying to solve the black screen issue after install and will update on that (basically the VM not recognizing any input, so it switches you to the second screen, but there isn't one).

The issue solved itself after some rebooting; I have no idea why. Windows mystery as usual.


So I switched from CentOS to Fedora because CentOS didn't support the kvm feature (which allows hiding the KVM state), but the problem I ran into is that on Fedora I cannot assign a USB host device through virt-manager: since the host is already using the device, it will not free it for the VM.

How did you manage to assign your devices? I can do it using the qemu-kvm command line, but I created the VM following your tutorial, so I don't have a script to launch it :/

I've been struggling with this for 3 days now; any help would be more than welcome :D

How to:

http://stackoverflow.com/questions/33618040/cannot-assign-mouse-and-or-keyboard-to-vm/33668313#33668313
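As a rough sketch of what USB assignment looks like on the libvirt side (an assumption about the general approach, not necessarily what the linked answer does), a USB device can be passed to the guest by vendor and product ID with a `<hostdev>` element inside `<devices>`. The IDs below are hypothetical placeholders; running `lsusb` on the host prints the real `vendor:product` pair for your mouse or keyboard.

```xml
<!-- Fragment of <devices>; vendor/product IDs are hypothetical placeholders -->
<hostdev mode='subsystem' type='usb'>
  <source>
    <!-- Replace with the IDs reported by lsusb, e.g. "ID 1234:5678" -->
    <vendor id='0x1234'/>
    <product id='0x5678'/>
  </source>
</hostdev>
```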

So it's done. There are some audio glitches to fix and some setup left to do, but it is working and gaming is on.

Setup:

MSI Z87-G45 Gaming

i7-4790K

KFA2 GeForce GTX 980 Ti HOF, 6 GB

Hi there,

Using these steps I've managed to get VFIO GPU passthrough working (i7-4790K, Asus Maximus VII Hero, Nvidia GTX 780 Ti, Fedora 23 host, Windows 10 guest).

However, one thing I haven't managed to get working is SLI (I have two GTX 780 Ti adapters installed).

I can passthrough both adapters, and Windows sees them both. They even both show up in the Nvidia Control Panel, but the option to enable SLI is missing.

Has anyone had any success with Nvidia SLI in a Windows guest?

Thanks,


Problem solved...

A stupid mistake: I didn't plug the power cable into the GPU, which caused Code 43 in the Windows guest...


I am stuck at the EFI shell. I just get the Shell> prompt and I don't know how to boot.

Could anyone point me in the right direction?

Do you mean when you install Windows on the VM?

Read the tutorial; it's explained there.

I am stuck at the shell. I do not see FS0: in the device mappings. I followed the article in the Fedora wiki (referenced below) and installed a Fedora guest that boots and displays the startup on the second monitor. I am unable to proceed past the interactive shell.

References


(https://fedoraproject.org/wiki/Using_UEFI_with_QEMU#Install_a_Fedora_VM_with_UEFI)

I'm having a problem with the NVIDIA passthrough. When I edit the XML, I get errors saying it can't validate against the schema: "Extra element devices in interleave" and "Element domain failed to validate content". I checked domaincommon.rng and it does include the hidden feature.

As a test, I changed the suspend-to-mem value to 'yes', and it gave me the same error message. I thought it could be permissions, so I ran chmod 666 on domain and domaincommon, but still no joy. I would really appreciate some help.

So instead of relying on validation, I hit "i" to ignore the error and save the changes anyway. I added the GPU (980 Ti) and its corresponding audio device. The screen is displayed, but it's stuck at the Windows loading screen (spinning dots), which just keeps spinning with no apparent progress.

Ugh, I edited the hidden feature out and tried again, and it does the same thing. So clearly the XML edit is not doing anything and the hidden feature is not being applied, so NVIDIA is still blocking the passthrough, resulting in the endless Windows loading screen?

I'm not sure whether this is related, but I didn't have the hypervclock or the hyperv tags in my XML; it just seems that I have to add the kvm tags...
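For comparison, the libvirt domain XML documentation places the `kvm` hidden state inside `<features>` (available since libvirt 1.2.8), next to any Hyper-V enlightenments, while the `hypervclock` timer belongs in `<clock>` rather than `<features>`. The sketch below only illustrates that placement; the `hyperv` entries and the `localtime` clock offset are optional examples, not settings taken from the article. If the schema rejects the `kvm` element itself, an older libvirt is one possible cause, though that is a guess rather than a diagnosis of the errors above.

```xml
<!-- Fragments of <domain>: <features> and <clock> are siblings of <devices> -->
<features>
  <acpi/>
  <hyperv>
    <!-- Optional Hyper-V enlightenments for Windows guests -->
    <relaxed state='on'/>
    <vapic state='on'/>
  </hyperv>
  <kvm>
    <!-- Hide the KVM hypervisor from standard MSR-based discovery -->
    <hidden state='on'/>
  </kvm>
</features>
<clock offset='localtime'>
  <!-- Hyper-V reference clock timer; lives under <clock>, not <features> -->
  <timer name='hypervclock' present='yes'/>
</clock>
```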

I have followed all the steps outlined, and everything seems to be working. I see the card in Windows Device Manager as "3D Video Controller", but the Nvidia drivers refuse to detect and install it. Manually installing the driver using the "Have Disk" option in Device Manager results in Windows claiming the driver is not compatible with this version of Windows (but it is?). Any advice on how to proceed?

Hello, I've followed your tutorial to set up a Windows 8.1 VM using UEFI + OVMF, but I could not get the Windows 8.1 installation CD-ROM to boot. Please help me.

Screenshot of virt-manager:

http://xargs.cn/usr/uploads/2016/01/3874887049.png

It appears to me that Nvidia purposely cripples its cards, except for the "Multi-OS" Quadro cards it deems VT-d enabled. I have a GTX 960 card with a Xeon X5690 CPU, and I'm observing that the frame rate is consistently under 20 FPS in newer games such as Borderlands 2, while slightly older games such as Supreme Commander 2 maintain a solid 60 FPS. The GTX 960 is normally capable of running BL2 at maximum video settings, and I've verified that the CPU is untaxed (under 20% usage) and that less than 15% of physical RAM is in use. Enabling MSI was the last optimization I had left to try. I'm going to try the Radeon HD 5850 to see how it fares; otherwise, I suspect I will go for a higher-end Radeon card, since Nvidia seems relatively nonviable and unnecessarily difficult to optimize for gaming.
